Regulation, misinformation and COVID19

- by William Perrin, Trustee, Carnegie UK Trust

- 30 April 2020

As we all pull together to combat COVID19, social media companies deserve some praise for their attempts to reverse the malign effects of some of their products and for stepping up to help national COVID19 communications. Some of the measures the companies have taken are a little unusual, and there is limited independent verification that they are effective, but the big tech firms are trying. This is not just the work of senior management: it reflects a profound effort by staff across the tech companies to make their products less dangerous, and they deserve praise too.

Decisions by social media companies about how their services are designed and operated have direct and indirect effects on whether outcomes for users are harmful. Pinterest, for instance, decided last year not to host anti-vaxx material on its platform, so when the COVID19 crisis broke it already had very clear community guidelines on content that might have “immediate and detrimental” effects on health or public safety. Anyone searching for coronavirus on Pinterest was met with the message:

“Pins about this topic often violate our community guidelines, which prohibit harmful medical misinformation. Because of this we’ve limited search results to pins from internationally recognised health organisations”.

Other platforms chose not to follow Pinterest’s example and, before COVID19, appeared either to care little about the spread of anti-vaxx messages or to devote few resources to enforcing their rules on this material. For instance, it took only three days from the start of the first UK COVID-19 vaccine trials for viral fake news about them to spread on social media. On a Thursday, Elisa Granato took part in the trials in Oxford. On the following Sunday, an online story claiming that she had died was rebutted by the BBC’s health correspondent, Fergus Walsh, who reported that “links to the article were posted in a number of Facebook groups which oppose vaccination. The claim has circulated in several languages. Some of the posts have now been removed”.

Companies are having to make COVID19 changes to their services partly because, for years, they designed and operated them to increase the velocity of information sharing and to make posting an almost thoughtless, unconscious act. During COVID19, WhatsApp has deployed its “velocity limiter” to reduce the number of times messages can be forwarded, after seeing “a significant increase in the amount of forwarding” during the crisis. WhatsApp first used this method in India two years ago, when its service was horrifyingly linked to a series of lynchings based on false messages shared at high speed. WhatsApp reported this week that deploying the limiter has led to a 70% reduction in ‘highly forwarded’ messages on its service. While there is no way to verify this impact independently, it appears to be a welcome step in the right direction, although it still leaves plenty of scope for forwarding.

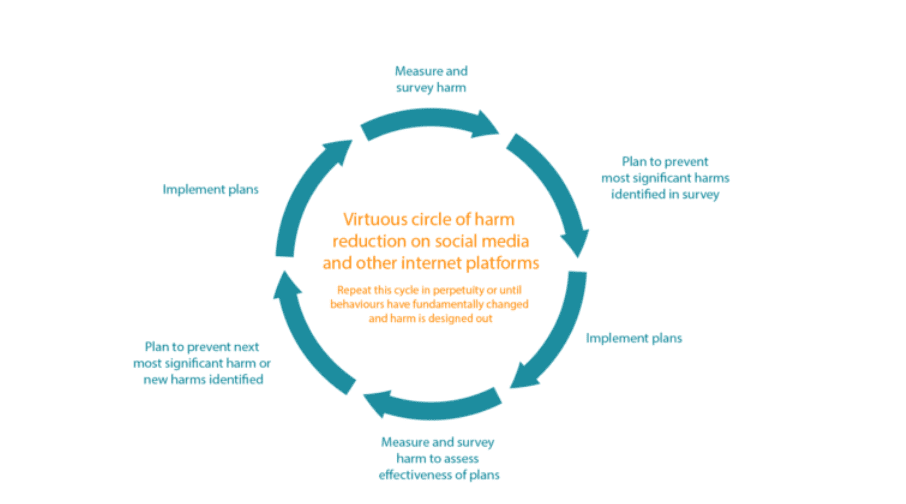

We have long argued that the UK Government’s online harms proposals need to take a systemic approach to regulation, biting at the level of platform design rather than at the level of individual pieces of content. The design choices that lead to the viral spread of disinformation or misleading information on coronavirus (ease of liking, sharing or forwarding; algorithmic promotion of sensationalist content; lack of transparent community guidelines or enforceable terms and conditions; failure to tackle effectively the proliferation of fake accounts or botnets) are the same ones that lead to the prevalence of online scams, the intimidation and harassment of public figures, and the possible subversion of electoral and democratic processes. COVID19 itself demonstrates the durability of the systemic statutory duty of care model: neither the lengthy codes of practice on online harm set out by the UK Government nor our own work mentioned disinformation relating to a pandemic, yet a duty of care, had it been in place, would have caught this problem.

The COVID19 crisis has hit at a time when many democracies are considering how to regulate social media effectively. The UK has advanced proposals based in part on our work at Carnegie. The new Secretary of State, Oliver Dowden, and the Minister of State, Caroline Dinenage, have held their first meetings in post with the tech companies at a moment when the companies are bucking their usual behaviour and taking concrete, real-time steps to reduce harm. Mr Dowden has taken care to praise the tech companies’ work.

Huge media groups have a significant role when information and behaviour are central to a national emergency. The Secretary of State is fighting a profound crisis and needs results immediately, and those results will be delivered more quickly by working with the companies’ newfound willingness to take action. Without a regulatory system, the Secretary of State’s only lever is corporate goodwill. In Parliament, though, the pressure for regulation continues: Mr Dowden’s colleague, the DCMS Lords Minister Baroness Barran, came under strong, almost un-Lordly pressure in the House yesterday. The Minister, a storied finance executive and charity campaigner, skilfully responded that the companies had done much but that more was expected of them. The social media companies, with their vast lobbying operations, will have noted this juxtaposition of their voluntary efforts vis-à-vis the pressure to bring regulation forward.

Social media companies will know that by demonstrating what they can do, they risk undermining their previous arguments about the difficulty of taking certain steps, and risk those steps being built into regulation as a baseline. But it is encouraging that, bit by bit, they are doing the right thing. The companies will also hope that this adds to the ‘good’ side of the register: in their most optimistic scenario planning, they might hope to see off regulation altogether by proving that they can step up to the plate. As we all move to some kind of ‘new normal’, perhaps we can expect sombre messages from social media executives telling a great American redemption story, as the Wall Street Journal noted yesterday: ‘Look we learned and changed, you can trust us now, put regulation to one side’.

What we have seen so far, though, amounts only to palliative measures that barely touch the systemic issues enabling online harm. There is little independent verification of outcomes, there are no sanctions, and the processes are opaque. It has taken a generation-defining crisis to prompt concerted action by social media platforms against the harm caused by their design choices, and only on a single issue; there is still far more to do. As we move to living with COVID19, the UK Government and Parliament should create a new normal for tackling online harm, locking in and going beyond the commendable actions of social media companies on COVID19, by delivering systemic regulation through a statutory duty of care enforced by an independent, empowered OFCOM.