Internet Harm Reduction: a Proposal

- by Professor Lorna Woods and William Perrin

- 30 January 2019

This proposal was developed by Professor Lorna Woods, University of Essex, and William Perrin, a Trustee of Carnegie UK Trust. Lorna and William each have over 20 years of experience of regulatory policy and practice across a range of regimes. The following is a summary of our publications during 2018-2019, which are informed by our extensive research and meetings with many companies, civil society actors, government, legislators and regulators.

We have designed a completely new regulatory regime to reduce harm to people from internet services. It draws on the experience of regulating in the public interest for externalities and harms to the public in sectors as diverse as financial services and health and safety. Our approach is systemic, rather than relying on palliative action on individual harms.

At the heart of the new regime would be a new ‘duty of care’ set out by Parliament in statute. This statutory duty of care would require most companies that provide social media or online messaging services used in the UK to protect people in the UK from reasonably foreseeable harms that might arise from use of those services. This approach is risk-based and outcomes-focused. A regulator would ensure that companies delivered on their statutory duty of care and it would have sufficient powers to drive compliance.

Duty of care

Social media service providers should each be seen as responsible for a public space they have created, much as property owners or operators are in the physical world. Everything that happens on a social media service is a result of corporate decisions: about the terms of service, the software deployed and the resources put into enforcing the terms of service.

In the physical world, Parliament has long imposed statutory duties of care upon property owners or occupiers in respect of people using their places, as well as on employers in respect of their employees. Variants of duties of care also exist in other sectors where harm can occur to users or the public. A statutory duty of care is simple, broadly based and largely future-proof. For instance, the duties of care in the 1974 Health and Safety at Work Act still work well today, enforced and with their application kept up to date by a competent regulator. A statutory duty of care focuses on the objective – harm reduction – and leaves the detail of the means to those best placed to come up with solutions in context: the companies who are subject to the duty of care. A statutory duty of care returns the cost of harms to those responsible for them, an application of the micro-economically efficient ‘polluter pays’ principle. The E-Commerce Directive permits duties of care introduced by Member States.

Parliament should guide the regulator with a non-exclusive list of harms for it to focus upon. These should be: the stirring up offences (including misogyny), harassment, economic harm, emotional harm, and harms to national security, to the judicial process and to democracy. Parliament has previously created regulators that have had few problems in arbitrating complex social issues, so these harms should not be problematic for the regulator. Some companies would welcome the guidance.

Who would be regulated?

The harms set out by the UK government in its 2017 Green Paper and by civil society focus on social media and messaging services, rather than basic web publishing. We exclude from the scope of our proposals those online services already subject to a comprehensive regulatory regime – notably the press and broadcast media. Messaging services have evolved far beyond simple one-to-one services. Many now support large groups, some of them semi-public or wholly public. We propose regulating services that:

- Have a strong two-way or multiway communications component;

- Display user-generated content publicly or to a large member/user audience or group.

The regime would cover reasonably foreseeable harm that occurs to people who are users of a service and reasonably foreseeable harm to people who are not users of a service.

Our proposals do not displace existing laws and regimes – for example, the regulatory regime overseen by the Advertising Standards Authority, the soon-to-be-published Age Appropriate Design Code under the Data Protection Act 2018, the Audio Visual Media Services Directive, which will affect some video content, or the law of defamation, as used recently by Martin Lewis in a case against Facebook.

Duty of care – risk-based regulation

Central to the duty of care is the idea of risk. If a service provider targets or is used by a vulnerable group of users (e.g. children), the risk of harm is greater and the service provider should have more safeguard mechanisms in place than a service which is, for example, aimed at adults and has community rules agreed by the users themselves (not imposed as part of the ToS by the provider) to allow robust or even aggressive communications.

Regulation in the UK has traditionally been proportionate, mindful of the size of the company concerned and the risk its activities present. Small, low-risk companies should not face an undue burden from the proposed regulation. Baking in harm reduction to the design of services from the outset reduces uncertainty and minimises costs later in a company’s growth.

How would regulation work?

The regulator should be one of the existing regulators with a strong track record of dealing with global companies. Giving the responsibility to an existing regulator allows regulation to start promptly, rather than being delayed by a three-year wait for the establishment of an entirely new body, by which time the game might be up. We favour OFCOM as the regulator, funded on a polluter-pays basis by those it regulates, either through a levy or through the forthcoming internet services tax.

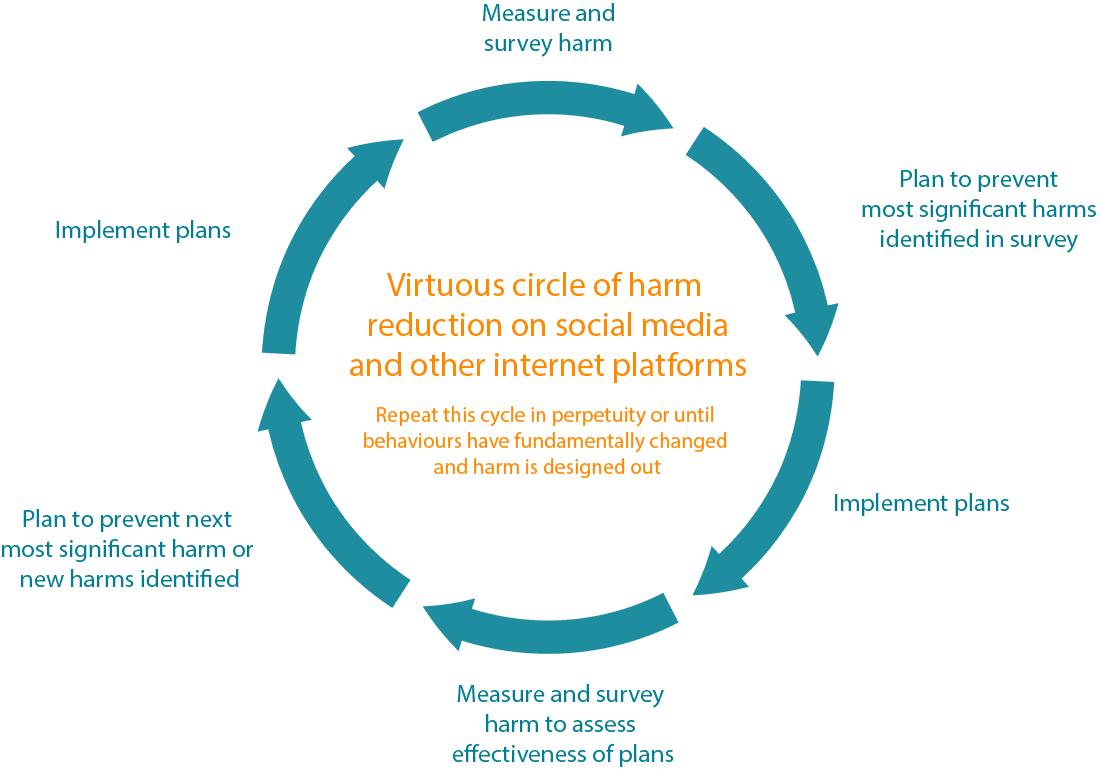

The regulator should be given substantial freedom in its approach so that it remains relevant and flexible over time. We know that harms can be surveyed and measured, as shown by OFCOM and the ICO in their survey work of September 2018. We suggest the regulator employ a harm reduction method similar to that used for reducing pollution: agree tests for harm; run the tests; the company responsible for the harm invests to reduce the tested level; test again to see whether the investment has worked; and repeat if necessary. If the level of harm does not fall, or if a company does not co-operate, the regulator would have sanctions available.
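
Purely as an illustration, the test, invest and retest cycle described above can be sketched in a few lines of Python. Nothing in this sketch comes from the proposal itself: the names `harm_reduction_cycle`, `CycleResult` and `ToyService`, the idea of a single numeric harm level, the target level and the cap on the number of rounds are all assumptions made for the example. In practice the tests would be agreed by the regulator with civil society, users, victims and the companies.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CycleResult:
    rounds: int          # number of times the agreed test was run
    final_level: float   # last measured level of harm
    sanction: bool       # True if the regulator would consider sanctions


def harm_reduction_cycle(
    measure_harm: Callable[[], float],
    invest: Callable[[], None],
    target_level: float,
    max_rounds: int = 5,
) -> CycleResult:
    """Hypothetical sketch: run the test, let the company invest, test again."""
    level = measure_harm()              # run the agreed test
    rounds = 1
    while level > target_level and rounds < max_rounds:
        invest()                        # company invests to reduce harm
        new_level = measure_harm()      # test again
        rounds += 1
        if new_level >= level:          # harm did not fall: sanctions apply
            return CycleResult(rounds, new_level, sanction=True)
        level = new_level
    # Still above target after the allowed rounds also points to sanctions.
    return CycleResult(rounds, level, sanction=level > target_level)


class ToyService:
    """Hypothetical stand-in for a regulated service (assumption)."""

    def __init__(self, harm_level: float, reduction_per_investment: float):
        self.harm_level = harm_level
        self.reduction = reduction_per_investment

    def measure(self) -> float:
        return self.harm_level

    def invest(self) -> None:
        self.harm_level = max(0.0, self.harm_level - self.reduction)


# A co-operative service whose investment reduces measured harm each round.
service = ToyService(harm_level=10.0, reduction_per_investment=3.0)
result = harm_reduction_cycle(service.measure, service.invest, target_level=2.0)
print(result)
```

A service whose investment makes no difference to the measured level (for example `reduction_per_investment=0.0`) would leave the loop after the first retest with `sanction=True`, mirroring the point that sanctions follow when the level of harm does not fall.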

In a model process, the regulator would work with civil society, users, victims and the companies to determine the tests and to discuss both the companies' harm reduction plans and their outcomes. The regulator would have the power to request information from regulated companies as well as having its own research function. The nature of fast-moving online services is such that the regulator should deploy the UK government’s formalised version of the precautionary principle, acting on emerging evidence rather than waiting years for full scientific certainty about services that have long since stopped. The regulator is there to tackle systemic issues in companies and, in this proposal, individuals would not have a right of action to the regulator or the courts under the statutory duty of care.

What are the sanctions and penalties?

The regulator needs effective powers to make companies change behaviour. We propose large fines set as a proportion of turnover, along the lines of the GDPR and Competition Act regimes. Strong criminal sanctions have to be treated cautiously in a free speech context, as does any application of S23 of the Digital Economy Act, which allows a service to be cut off by UK ISPs. We have also suggested powers that bite on directors personally, such as fines, given the way charismatic founders continue to be involved in running social media companies.

Timing

The industry develops fast and urgent action is needed. This creates a tension with the traditional deliberative process of forming legislation and then regulation. We would urge the government to find a route to act quickly and bring a duty of care to bear on the companies as fast as possible. There is a risk that if we wait three or four years the harms may be out of control. That would be good neither for society nor for the companies concerned.

Next steps

We continue to welcome feedback and comments on our proposals and to work collaboratively with other organisations seeking to achieve similar outcomes in this area. Contact us via: [email protected]

For further information on the above, and the background to our thinking as it emerged and evolved, see the resources at Carnegie UK Trust.
