International Co-operation on Platform Governance

- by Professor Lorna Woods (University of Essex, Professor of Internet Law)

- 7 November 2019


Lorna Woods, Professor of Internet Law at the University of Essex and co-author of the Carnegie UK Trust’s proposals for Harm Reduction on Social Media, will today give evidence at the International Grand Committee on Disinformation and Fake News in Dublin. The Committee, formed of Parliamentarians from eleven countries, is meeting for the third time, having previously convened in London and Ottawa. The Dublin session focuses on “advancing international collaboration in the regulation of harmful content, hate speech and electoral interference online”. It takes place a day after a workshop on the theme of “international co-operation on platform governance”, facilitated by CIGI, at which both Lorna Woods and Will Perrin took part in panel discussions.

In her written statement to the Grand Committee, Lorna Woods set out the following perspective on the theme of international collaboration:

To work effectively together, Parliamentarians should develop a common language, not just about the problems that exist but also about possible mechanisms for moving towards a solution.

Two of the main difficulties when considering action in relation to online disinformation and fake news are the scale and the context of the content involved. Some platforms have an almost unimaginable amount of content uploaded and shared per second, in different languages and with meanings that may have specific relevance for particular groups. Dealing with this situation on the basis of individual items of content is difficult.

An alternative way of considering solutions, and one proposed by the Carnegie UK Trust’s work in this area, is to look not at the content itself, but at the underlying systems which allow content to be shared and specifically at how design and business choices affect our information environment. Social media platforms (as well as other service providers in the internet distribution chain) are not content-neutral. Whether or not they were intended to do so, they encourage and reward some items of content over others. Some platforms, because of their design features, seem to have a greater problem with fake news than others. Some of this may be about size but it may also be about design choices: for example, the ease with which stories are forwarded on, or embedded from another source and in the process decontextualised; or the prioritisation of ‘click bait’ and stories stoking outrage. Looking at the information system behind the content focusses attention on the mechanisms by which disinformation spreads, which to a large extent remain constant from jurisdiction to jurisdiction and over time. Questions of individual content, by contrast, change frequently and also raise the question of what particular content means and whether it is true or not.

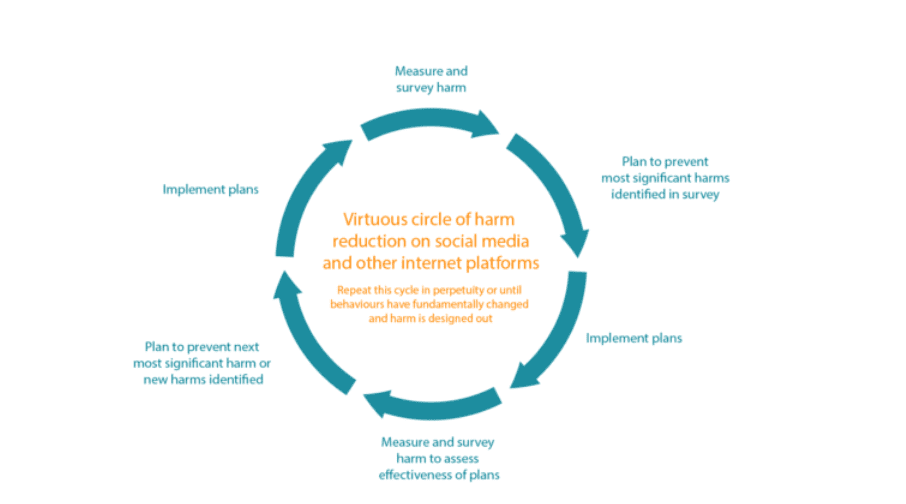

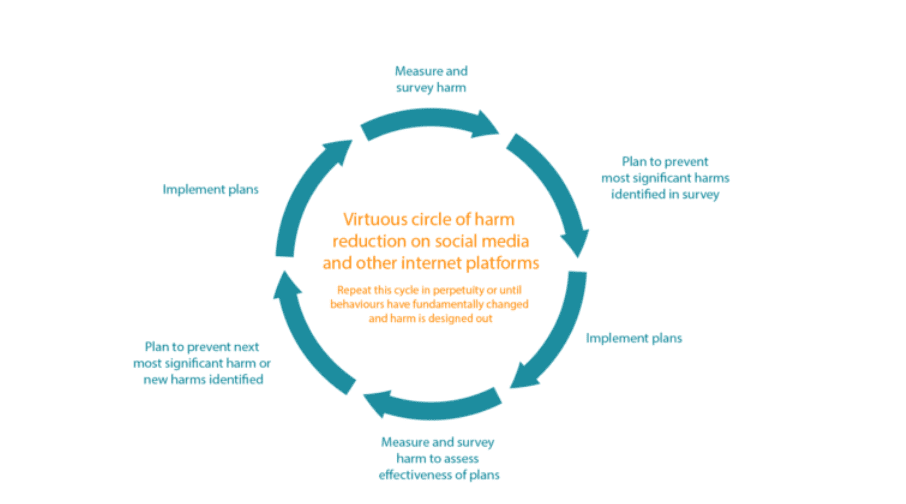

The proposal put forward by the Carnegie UK Trust is for a statutory duty of care. The duty of care is a process-based obligation orientated towards the reduction of harm on the internet. The obligation is to consider the effect of the services and tools that are being offered and how they are being used, especially bearing in mind their design features. Where harm is or becomes likely as a result of the service, the obligation on the service provider is to take steps to mitigate it. The existence of ‘problem content’ is an indicator of a process problem, but ultimately success is measured by reference to care in the design, development and maintenance of the service, not by the existence of particular items of content. The duty of care model is not a ‘silver bullet’ and there may well be instances when additional, targeted action is needed.

A process-focussed duty at systems level has advantages in the international context of disinformation on the internet:

- it mitigates concerns about scale;

- it minimises risks and difficulties arising from understanding meaning and context in different environments; and

- it thus allows a single, common approach to be taken internationally.

The International Grand Committee will hold a press conference on its conclusions at the end of its Dublin session. We look forward to continuing to collaborate with international partners on this agenda, in addition to advancing our work in the UK to influence the development of online harms policy.
